yaws

yaws is a harmonized environment-neutral open source HTTP server capability.

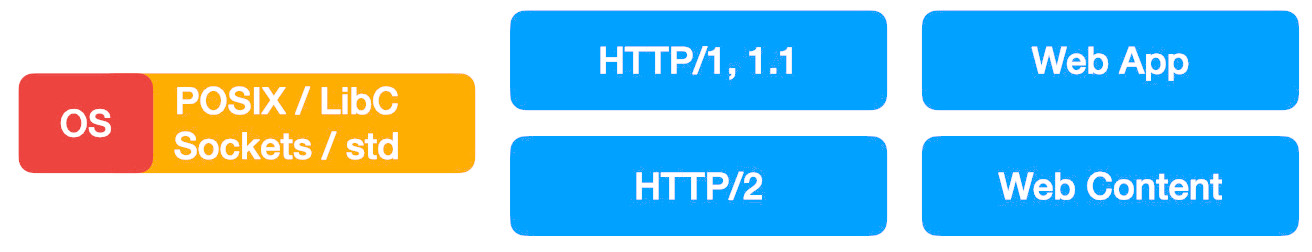

Traditionally, web servers are monolithic in nature, tightly coupled to std and to their environment.

yaws decouples this construct so that HTTP servers become environment-neutral.

Architecture

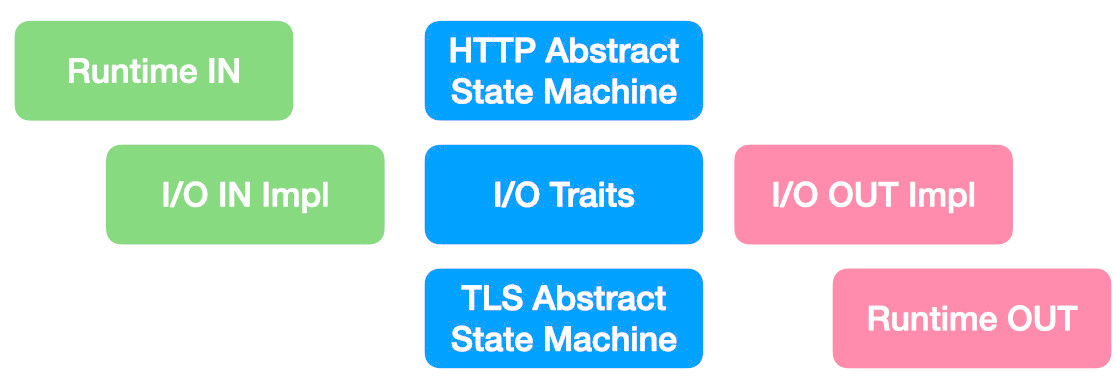

yaws is driven through its traits, by providing implementations of Input and Output.

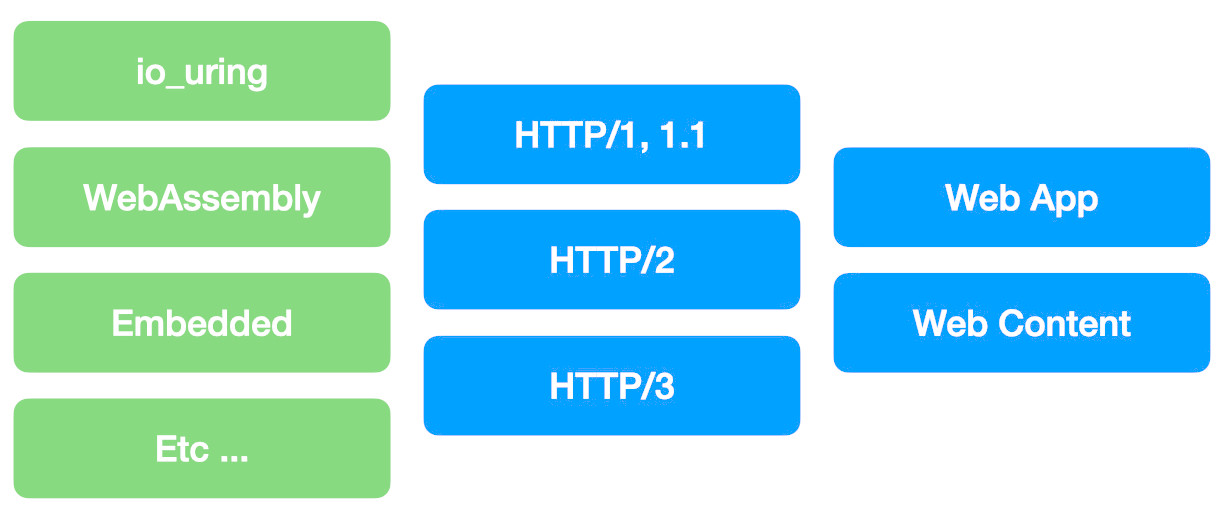

Flavors

yaws reference runtime implementations are called flavors.

Initially, yaws provides reference runtimes (flavors) for:

- Lunatic: Rust + Erlang + WebAssembly, guest-side

- io_uring: Linux io_uring completion-based I/O, host-side

Binary Runtime

Users can run any of the flavors directly as a binary through cfg(yaws_flavor).

This cfg can be supplied either through .cargo/config.toml or through --cfg (e.g. in RUSTFLAGS) when building the top-level binary.

io_uring Binary

```shell
$ RUSTFLAGS="--cfg yaws_flavor=\"io_uring\"" cargo run --bin yaws
```

Lunatic Binary

```shell
$ RUSTFLAGS="--cfg yaws_flavor=\"lunatic\"" cargo run --bin yaws --target wasm32-wasi
```
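For illustration, here is a minimal sketch of how code might branch on the `yaws_flavor` cfg at compile time. The function `selected_flavor` is hypothetical and not part of yaws; only the cfg name and its two values come from the commands above.

```rust
// Hypothetical sketch: compile-time flavor selection through the custom
// `yaws_flavor` cfg, e.g. RUSTFLAGS="--cfg yaws_flavor=\"io_uring\"".

/// Name of the flavor this binary was compiled for.
#[cfg(yaws_flavor = "io_uring")]
pub fn selected_flavor() -> &'static str {
    "io_uring"
}

/// Name of the flavor this binary was compiled for.
#[cfg(yaws_flavor = "lunatic")]
pub fn selected_flavor() -> &'static str {
    "lunatic"
}

/// Fallback compiled when no known flavor was supplied at build time.
#[cfg(not(any(yaws_flavor = "io_uring", yaws_flavor = "lunatic")))]
pub fn selected_flavor() -> &'static str {
    "none"
}
```

Without any `--cfg`, only the fallback is compiled and it returns `"none"`. On recent toolchains, an undeclared custom cfg like this may trigger the `unexpected_cfgs` lint warning unless it is declared for check-cfg.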

Library Driver

Users can implement the I/O traits in conjunction with the abstract state machines implementing HTTP.

Refer to the API documentation or see the existing reference runtimes or flavors for examples.

Validation

yaws validation consists of the following:

- HTTP protocol conformance against the h1, h2 and h3 specifications.

- HTTP-TLS conformance

Because yaws is organized around its traits, we validate all the implementations through trait implementations that require no I/O.
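To illustrate what validation "requiring no I/O" can look like, the sketch below feeds bytes straight into a toy state machine. `LineCounter` and `count_in_chunks` are hypothetical and far simpler than any yaws state machine; the point is that a test can replay the same input under every possible chunking without touching a socket.

```rust
/// Toy sans-I/O state machine: counts complete CRLF sequences in a byte
/// stream. It never performs I/O; a runtime (or a test) hands it bytes.
#[derive(Default)]
pub struct LineCounter {
    saw_cr: bool, // last byte seen so far was '\r'
    lines: usize, // number of complete "\r\n" sequences seen
}

impl LineCounter {
    /// Consume the next chunk of input. Chunks may split "\r\n" anywhere.
    pub fn feed(&mut self, input: &[u8]) {
        for &b in input {
            if self.saw_cr && b == b'\n' {
                self.lines += 1;
            }
            self.saw_cr = b == b'\r';
        }
    }

    pub fn lines(&self) -> usize {
        self.lines
    }
}

/// Validation helper: the result must not depend on how input is chunked.
pub fn count_in_chunks(input: &[u8], chunk: usize) -> usize {
    let mut sm = LineCounter::default();
    for part in input.chunks(chunk) {
        sm.feed(part);
    }
    sm.lines()
}
```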

Security

Supported Versions

We, as the yaws maintainers, strive to support all previous versions of yaws unless declared End-of-Life.

Once stable, at least the previous major SemVer version (-1 major) is typically supported.

Before the first stable major version (1.0), unstable versions are supported for the previous two minor releases (-2 minor).

Reporting

Please report security-related issues by e-mail to security@TBD prior to any disclosure.

We ask that contributors give us, the maintainers, reasonable time to address any issues.

The maintainers will work collaboratively with any contributor/s to verify and fix any reported issues.

Contributors are not required to write a PoC or a fix.

Nonetheless, if you do have one, sharing it privately before disclosure is most welcome and helpful.

Disclosure

We will publish and credit contributors for all security issues through RustSec, which independently feeds into various security databases.

Performance

yaws performance testing covers the following:

- Memory usage under low and high load

- Minimum, average and maximum latency

- Protocol performance without I/O (sans-I/O)

- HTTP-TLS
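As a small illustration of the latency metric above, here is a hypothetical helper (not part of yaws) that summarises recorded samples, assumed here to be in microseconds:

```rust
/// Hypothetical helper: (min, avg, max) over latency samples in
/// microseconds, or `None` when there are no samples.
pub fn latency_summary(samples_us: &[u64]) -> Option<(u64, f64, u64)> {
    let min = *samples_us.iter().min()?;
    let max = *samples_us.iter().max()?;
    // Sum in u128 so long runs of large samples cannot overflow.
    let sum: u128 = samples_us.iter().map(|&s| s as u128).sum();
    Some((min, sum as f64 / samples_us.len() as f64, max))
}
```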

Cryptography

yaws HTTP-TLS uses rustls (>0.23) under the hood.

It can be configured with various providers implementing CryptoProvider.

Downstream users do not need to be concerned with this TLS implementation detail.

Yaws Sans-I/O Overview

Yaws decouples all the I/O from the rest of the state machine through the following set of traits, each with a differing set of implementors.

The traits below are intended to be implemented by both the state machines and the runtimes.

The I/O traits, in turn, are intended to be implemented by the runtimes handling the I/O:

| Trait | Description |
|---|---|
| NoLeft & Left | "Left" side of the state machine/s |
| NoRight & Right | "Right" side of the state machine/s |

Yaws BluePrint

Yaws blueprints are the static descriptions of the intended state machine configuration, used to instantiate the Orbits that run the state machines.

Typically a runtime (such as yaoi) provides an "injection point" to both instantiate chained state machines and advance them, with the I/O managed by the runtime.

A static collection of configured Blueprints is typically injected through the runtime for each accepted or connected TCP client.

Yaws blueprints are designed to be used in either std or no_std environments, with or without alloc.
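The const-generic shape of `Blueprints<1, Orbits>` and `Blueprints<0, Orbits>` used in the instantiation example suggests how blueprints can avoid allocation. The sketch below is a simplified, hypothetical model of that idea (the `Kind` enum and these methods are illustrative, not the yaws API): the number of intermediate layers is a const parameter, so the whole chain is a fixed-size value usable in no_std without alloc.

```rust
// Hypothetical, simplified model of const-generic blueprints; not the
// yaws API. Uses only core language features, so it also fits no_std.

/// Illustrative stand-in for the kinds of orbits a chain can contain.
#[derive(Debug, Clone, Copy, PartialEq)]
pub enum Kind {
    Tls,
    H11Server,
}

/// `N` intermediate layers plus exactly one terminating app.
pub struct Blueprints<const N: usize> {
    layers: [Kind; N],
    app: Kind,
}

impl<const N: usize> Blueprints<N> {
    pub const fn new(layers: [Kind; N], app: Kind) -> Self {
        Self { layers, app }
    }

    /// Depth of the whole chain: the layers plus the app.
    pub const fn depth(&self) -> usize {
        N + 1
    }

    pub fn layers(&self) -> &[Kind] {
        &self.layers
    }

    pub fn app(&self) -> Kind {
        self.app
    }
}
```

A TLS pipeline would be `Blueprints::<1>::new([Kind::Tls], Kind::H11Server)`, and a cleartext one `Blueprints::<0>::new([], Kind::H11Server)`.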

Configuration

See the example of how an HTTPS pipeline is configured; that configuration is then injected into the instantiated client/server contexts that bring together the chained blueprints.

Each protocol or app takes either its default configuration or a custom one (with_configuration) upon instantiation into a running set of chained Orbits.

Yaws Orbit

Yaws Orbits are the state machines instantiated from a set of blueprints, typically run as "pipelines" over the given external I/O.

All Orbits are advanced using the Left and Right traits.

See the example Orbit implementation advancing the TLS state machine, which advances either the client or the server TLS context.

Each blueprint composition must contain at least one App layer; the intermediate Layers are optional.

Each runtime decides how to process the intermediate layers as well as the terminating layer, since each runtime is expected to have its own unique I/O characteristics.

Typically, a runtime first processes the raw I/O all the way from Left to Right (as input) through the chained state machines, and then passes the final summary (or reduced output) back through the chain into the I/O layer. In other words, it advances first from Left to Right for input, and then from Right to Left for the final output.
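The two-pass flow described above can be sketched as follows. This is a hypothetical model, not the yaws `Left`/`Right` traits: each layer transforms bytes Left-to-Right on input and Right-to-Left on output, the app terminates the chain, and a toy XOR layer stands in for something like TLS. Trait objects are used here only for brevity; yaws itself uses static enum dispatch (see Known Blueprints).

```rust
// Hypothetical model of one runtime cycle; not the yaws Left/Right traits.

/// An intermediate layer: transforms input Left->Right, output Right->Left.
pub trait Layer {
    fn left_to_right(&mut self, input: &[u8]) -> Vec<u8>;
    fn right_to_left(&mut self, output: &[u8]) -> Vec<u8>;
}

/// The terminating app: consumes its Left side and produces a reply.
pub trait App {
    fn handle(&mut self, input: &[u8]) -> Vec<u8>;
}

/// Toy stand-in for e.g. TLS: XOR with a fixed key in both directions.
pub struct XorLayer(pub u8);

impl Layer for XorLayer {
    fn left_to_right(&mut self, input: &[u8]) -> Vec<u8> {
        input.iter().map(|b| b ^ self.0).collect()
    }
    fn right_to_left(&mut self, output: &[u8]) -> Vec<u8> {
        output.iter().map(|b| b ^ self.0).collect()
    }
}

/// Toy app that replies with its input upper-cased.
pub struct UpperApp;

impl App for UpperApp {
    fn handle(&mut self, input: &[u8]) -> Vec<u8> {
        input.to_ascii_uppercase()
    }
}

/// Input flows Left->Right through every layer into the app; the app's
/// reply then flows Right->Left back through the layers in reverse order.
pub fn advance(layers: &mut [Box<dyn Layer>], app: &mut dyn App, raw_in: &[u8]) -> Vec<u8> {
    let mut buf = raw_in.to_vec();
    for layer in layers.iter_mut() {
        buf = layer.left_to_right(&buf);
    }
    let mut out = app.handle(&buf);
    for layer in layers.iter_mut().rev() {
        out = layer.right_to_left(&out);
    }
    out
}
```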

Instantiation

A typical chain of Orbits might be instantiated as follows:

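```rust
use std::path::Path;

// CA, CERT and KEY are path constants defined elsewhere; the remaining
// types come from the yaws blueprint crates.
fn tls_server_blueprints() -> Result<Blueprints<1, Orbits>, ConfigurationError> {
    // Load the CA, certificate and private key for the TLS server
    let tls_config_server =
        TlsServerConfig::with_certs_and_key_file(Path::new(CA), Path::new(CERT), Path::new(KEY))
            .unwrap();

    // Server-side TLS context, wrapped for use as a blueprint layer
    let server_context =
        blueprint_tls::TlsContext::Server(TlsServer::with_config(tls_config_server).unwrap());

    // One TLS layer, terminated by the HTTP/1.1 server app
    BlueprintsLayers::<1>::layers([Orbits::Tls(server_context)])
        .app(Orbits::H11Server(H11SpecServer::with_defaults().unwrap()))
}
```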

This is then typically provided to the runtime when new clients are instantiated, e.g. in yaoi upon TCP accept:

```rust
// For a TLS pipeline, use this line instead of the cleartext one below
// let mut bp_listener: [Blueprints::<1, Orbits>; 2] = core::array::from_fn(|_| tls_server_blueprints().unwrap());

let mut bp_listener: [Blueprints::<0, Orbits>; 2] = core::array::from_fn(|_| clear_server_blueprints());

// Set up the listener behaviour on accept
listener
    .accept_with_cb(&mut bp_listener, |ud, stream| {
        // Index this client's blueprints by its fixed fd
        let id = stream.fixed_fd().unwrap() as usize;
        stream.run_blueprints(&mut ud[id - 1]).unwrap();
    })
    .unwrap();
```

Layer

Each Layer is designed to sit between the actual I/O and the terminating App, and is typically provided with both the Left and Right implementations.

In the context of TLS, a good way to think of the two sides is that Left is the "Ciphertext" side whereas Right is the "Cleartext" side, keeping the two clearly isolated throughout processing.

Since Layers can be stacked (or nested) statically on top of each other, they do not know about each other; the runtime is dedicated to passing the I/O through to advance each Layer within the chained, instantiated blueprints.

App

An App, on the other hand, is designed to terminate the I/O processing pipeline as the last remaining "Layer", and is typically only interested in its Left side I/O.

Left of State Machines

The Left side of the state machines can be thought of as the I/O originating side: produced by the runtime (responsible for managing the I/O) and consumed by the state machine/s (responsible for processing the I/O provided by the runtime/s).

For example, in TLS we use the Left side as the "Ciphertext" side.

The trait and its requirements are defined in the blueprint crate.

Requirements / Assumptions

The following requirements / assumptions are currently made as of v0.1.0:

| fn | Description | Assumptions |
|---|---|---|
| [set_]left_in_blocked | Is Left blocked? | Right will not advance, see Blocking Left section below |
| left_set_lens | Set new lengths for Left buffers | Bounds are respected and checked by the state machines, see the Buffering section |
| [set_]left_want_[read/write] | Does Left want to Read/Write? | State machine sets and runtime provides traffic based on the state machine "wants" |
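A hypothetical trait shaped after the table above may help make the contract concrete. The method names follow the table, but the signatures, the `OutOfBounds` error and the `LeftState` implementation are assumptions for illustration only; the real trait lives in the yaws blueprint crate.

```rust
// Hypothetical Left-side contract modelled on the table above; the real
// trait lives in the yaws blueprint crate and its signatures may differ.

/// Returned when requested buffer lengths exceed the available capacity.
#[derive(Debug, PartialEq)]
pub struct OutOfBounds;

pub trait Left {
    fn left_in_blocked(&self) -> bool;
    fn set_left_in_blocked(&mut self, blocked: bool);
    fn left_set_lens(&mut self, in_len: usize, out_len: usize) -> Result<(), OutOfBounds>;
    fn left_want_read(&self) -> bool;
    fn set_left_want_read(&mut self, want: bool);
    fn left_want_write(&self) -> bool;
    fn set_left_want_write(&mut self, want: bool);
}

/// Runtime-owned Left state over buffers of fixed capacity `CAP`.
pub struct LeftState<const CAP: usize> {
    in_len: usize,
    out_len: usize,
    blocked: bool,
    want_read: bool,
    want_write: bool,
}

impl<const CAP: usize> LeftState<CAP> {
    /// Starts unblocked and wanting to read.
    pub const fn new() -> Self {
        Self { in_len: 0, out_len: 0, blocked: false, want_read: true, want_write: false }
    }

    pub fn lens(&self) -> (usize, usize) {
        (self.in_len, self.out_len)
    }
}

impl<const CAP: usize> Left for LeftState<CAP> {
    fn left_in_blocked(&self) -> bool { self.blocked }
    fn set_left_in_blocked(&mut self, blocked: bool) { self.blocked = blocked; }
    fn left_set_lens(&mut self, in_len: usize, out_len: usize) -> Result<(), OutOfBounds> {
        // Bounds are checked before any state is changed.
        if in_len > CAP || out_len > CAP {
            return Err(OutOfBounds);
        }
        self.in_len = in_len;
        self.out_len = out_len;
        Ok(())
    }
    fn left_want_read(&self) -> bool { self.want_read }
    fn set_left_want_read(&mut self, want: bool) { self.want_read = want; }
    fn left_want_write(&self) -> bool { self.want_write }
    fn set_left_want_write(&mut self, want: bool) { self.want_write = want; }
}
```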

Blocking Left

Often it is desirable to "block" a layer so that only its Left side is processed, e.g. during TLS negotiation, before the layer can operate to transform the Cleartext (Right) traffic into Ciphertext (Left).

For example, within the yaoi runtime we stop the Left-to-Right processing at the blocked layer to ensure that all the necessary negotiation has completed before advancing the layers above it. In the case of TLS, this means no Cleartext traffic is generated on top to be transformed into Ciphertext until the handshake is done, which reduces memory pressure from the intermediary layers and avoids wasted processing if the TLS handshake fails.

Blocking differs from "readiness" in that blocking can be set at any point across the lifetime of the instantiated orbit, whereas readiness is set only once.
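A minimal, hypothetical sketch of the yaoi behaviour described above: the runtime walks the chain Left-to-Right and stops after the first blocked layer, so the layers to its Right are not advanced until it unblocks. `Stage` and `advance_until_blocked` are illustrative names only, not yaoi APIs.

```rust
/// Illustrative stand-in for one layer in an instantiated chain.
pub struct Stage {
    pub name: &'static str,
    pub blocked: bool,
}

/// Returns the names of the stages advanced during this cycle.
pub fn advance_until_blocked(stages: &[Stage]) -> Vec<&'static str> {
    let mut advanced = Vec::new();
    for stage in stages {
        // The blocked stage itself is still advanced (it must finish its
        // negotiation), but nothing to its Right is.
        advanced.push(stage.name);
        if stage.blocked {
            break;
        }
    }
    advanced
}
```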

Runtime (impl Producer)

The Left side is implemented in the runtime, which provides the correct buffering for both the I/O and the intermediary layers.

State Machine (impl Consumer)

The state machine is provided with an implementation of the instantiated Left side by the runtime.

Right of State Machines

The Right side of the state machines can be thought of as the I/O destination side: produced by the state machine/s and then consumed either by the other layers further along the chain or by the final terminating App (for which it is typically provided as the App's Left side, taken from the Right side of the layer below it in the stack).

For example, in TLS we use the Right side as the "Cleartext" side.

Requirements / Assumptions

The following requirements / assumptions are currently made as of v0.1.0:

| fn | Description | Assumptions |
|---|---|---|
| wants_right_next_in | State machine wants Right input | Runtime should provide Right side traffic as desired |

Runtime (impl Producer)

Typically, within the runtime, the Right side is mirrored transparently as the Left side of the next layer further Right in the blueprints.

Runtimes should keep sufficient intermediary buffering capacity, as desired by their users; each buffer is first used as one layer's Right side and then passed as the next layer's Left side in the next cycle.
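One way a runtime might mirror each Right side as the next Left side is to alternate between two reusable buffers, as in this hypothetical sketch where plain function pointers stand in for state machines (`StepFn` and `run_chain` are illustrative, not yaws APIs):

```rust
// Hypothetical sketch: two reusable buffers shared across a chain. What a
// stage writes as its Right side becomes the next stage's Left side by
// swapping the buffers, so nothing is copied between stages.

/// Stand-in for a state machine: reads its Left side, appends its Right side.
pub type StepFn = fn(&[u8], &mut Vec<u8>);

pub fn run_chain(stages: &[StepFn], input: &[u8]) -> Vec<u8> {
    let mut left = input.to_vec();
    let mut right = Vec::with_capacity(input.len());
    for stage in stages {
        right.clear();
        stage(&left, &mut right);
        // This stage's Right side is the next stage's Left side.
        std::mem::swap(&mut left, &mut right);
    }
    left
}
```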

State Machine (impl Consumer)

This isolation means that state machines know nothing about each other; the data is typically transformed forward through each machine's Right side, reflecting what its Left side was.

A state machine can also generate its Right side without a Left side, e.g. in various testing scenarios or when replaying recorded I/O.

Runtimes Overview

To provide the highest common denominator of portability within yaws, we have designated the individual "driver" runtimes to provide, and ultimately own, the I/O within their own constraints: capacity, security and performance profiles.

For example, the mechanics of handling I/O on a microcontroller (no_std) can be very different from OS-enabled (or host-based) io_uring completion-based I/O.

Runtimes (or drivers) are intended to be the only differing part of the ecosystem, providing support for each given environment.

Each runtime, as the ultimate owner and manager of the underlying I/O, makes the necessary decisions to provide appropriate buffering and to schedule the advancing of the blueprints within the unique environment it operates in.

Only the runtime makes environment-related decisions, e.g. whether to use threading. This keeps such tricky decisions out of the state machines, which would otherwise each need separate support for every environment and its constraints; the runtime driver/s provide that support instead.

Buffering

Given that the runtimes effectively own the I/O, they also own the buffering (and scheduling) mechanics.

Typically runtimes are I/O driven and must buffer not only the initial Left side but also every intermediary layer throughout the processing.

Each runtime is responsible for both managing the I/O and providing the appropriate buffering to each encountered layer, as described by the blueprints provided to the runtime by the user.

For example, yaoi provides various buffering schemes depending on the workload, including incremental Linux huge page buffering, which greatly speeds up continuously streamed large payloads.

YAOI

YAOI, or Yet Another Output Input, is the io_uring runtime in yaws.

It provides host-based processing leveraging Linux io_uring and huge pages, with several buffering schemes depending on the workloads it serves.

See the example HTTPS pipeline for how to use it.

It also serves as the example of how to create a driver runtime for yaws to support more environments and configurations.

State Machines Overview

yaws Layers (or state machines) run statefully, typically transforming their Left side into their Right side within a chain of state machines.

State machines don't know about each other's existence, so they can be processed as isolated, stateful computation units, independently and in parallel.

Given these design characteristics, state machines should store as little state as possible and process the traffic in a streaming fashion.

Users typically instantiate running state machines as orbits through blueprints and then provide them to the runtime driver, which advances all the chained state machines in the provided context.

Known Blueprints

Source: yaws-rs/blueprint/known

Since the blueprints typically have to be constructed using the same type, we provide a static enum dispatch of all the known blueprints to avoid dynamic indirection.
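A simplified, hypothetical sketch of static enum dispatch (the types below are illustrative stand-ins, not the known-blueprints enum itself): all variants share one type, so a chain can live in a plain array and each call is a `match` rather than a vtable lookup.

```rust
/// Illustrative stand-ins for two known blueprint state machines.
pub struct Tls {
    pub handshaken: bool,
}
pub struct H11 {
    pub requests: u32,
}

/// One enum covering every known kind: no Box<dyn Trait>, no allocation.
pub enum Known {
    Tls(Tls),
    H11(H11),
}

impl Known {
    /// A `match` replaces a vtable call; the compiler sees every arm.
    pub fn name(&self) -> &'static str {
        match self {
            Known::Tls(_) => "tls",
            Known::H11(_) => "h11",
        }
    }

    /// Whether this entry terminates the chain as an App.
    pub fn is_app(&self) -> bool {
        matches!(self, Known::H11(_))
    }
}
```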

TLS

Source: yaws-rs/tls/blueprint.

Users typically provide the TLS Layer configuration through its context within the blueprints provided to the runtime driver.

```rust
let tls_config_server =
    TlsServerConfig::with_certs_and_key_file(Path::new(CA), Path::new(CERT), Path::new(KEY))
        .unwrap();

let server_context =
    blueprint_tls::TlsContext::Server(TlsServer::with_config(tls_config_server).unwrap());
```

The Left side of the state machine is used as the Ciphertext side and the Right side as the Cleartext side.

The TLS state machine handles the necessary handshake and negotiation in the middle, and then either encrypts the traffic from Right-to-Left or decrypts it from Left-to-Right.

HTTP/1.1

Source: github/yaws-rs/h11spec/blueprint

Currently HTTP/1.1 is an "App Layer" provided as the terminating layer.

The HTTP state machine only takes the Left side and does not currently provide any transformation.

In the future the HTTP state machine will provide transformation, where the Right side can implement application functionality over HTTP.

The HTTP state machine is designed to parse only the minimal metadata needed for the HTTP layer's own processing, and then passes the rest on to the application on top.
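To illustrate what "minimal" parsing could mean, the hypothetical sketch below (not the h11spec API) splits out only the HTTP/1.1 request line and returns the remaining header and body bytes untouched for the layer on top:

```rust
/// Borrowed view of an HTTP/1.1 request line; nothing is copied.
#[derive(Debug, PartialEq)]
pub struct RequestLine<'a> {
    pub method: &'a str,
    pub target: &'a str,
    pub version: &'a str,
}

/// Returns the parsed request line and the remaining, unparsed bytes,
/// or `None` if the line is incomplete or malformed.
pub fn parse_request_line(buf: &[u8]) -> Option<(RequestLine<'_>, &[u8])> {
    // Find the end of the first line (CRLF).
    let end = buf.windows(2).position(|w| w == b"\r\n")?;
    let line = std::str::from_utf8(&buf[..end]).ok()?;
    let mut parts = line.split(' ');
    let method = parts.next()?;
    let target = parts.next()?;
    let version = parts.next()?;
    // Exactly three parts, and the last must look like an HTTP version.
    if parts.next().is_some() || !version.starts_with("HTTP/") {
        return None;
    }
    Some((RequestLine { method, target, version }, &buf[end + 2..]))
}
```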

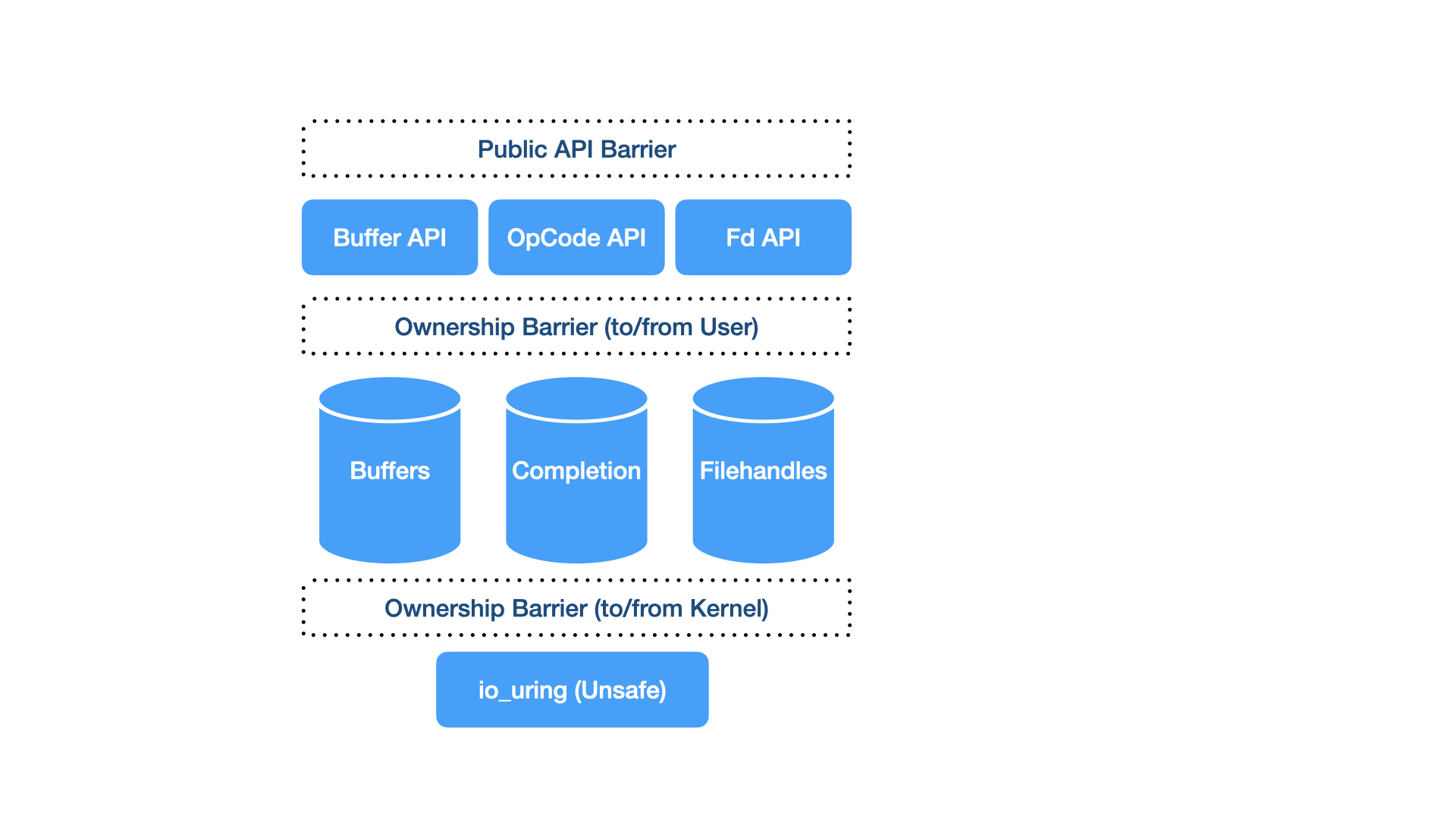

Introduction

yaws io_uring abstracts the underlying low-level Linux io_uring library.

We aim to provide safer semantics for managing all the moving parts, given that these have complex lifetimes and ownership, typically shared between the kernel, userspace and the user.

Bearer

UringBearer (io-uring-bearer) is the carrier holding all of the generated instances of io_uring-associated types.

OpCode

OpCode and OpCompletion (io-uring-opcode) are the contracts between the bearer and the individual opcode implementations.

In addition, io-uring-opcode provides the required extension traits for implementing the individual opcodes.

Ownership

Owner (io-uring-owner) provides the ownership semantics between the kernel, userspace and the user.

Filehandles

RegisteredFd and FdKind (io-uring-fd) provide the types representing filehandles within io_uring.

Buffers

UringBuffer (io-uring-buffer) will provide the types representing buffers within io_uring.

Note: At the time of writing, buffers are still held within the bearer, but this will change.

Bearer

The io-uring-bearer crate consists of the following main types:

| Type | Description |
|---|---|
| UringBearer | The main carrier type holding all the instances of Completion, Registered Filehandles & Buffers |
| UringBearerError | The error type for UringBearer |
| BearerCapacityKind | Descriptor used to describe the boundaries of the required capacities |
| Completion | The main completion type bringing together all the possible completions |

Associated Built-In Types

The types below will later be migrated into separate crates implementing the io-uring-opcode traits, similar to EpollCtl.

Until then, io-uring-bearer still holds some of the required holding types:

| Holding Type | Description |
|---|---|
| BuffersRec | Holds the actual allocation for the Buffers, owned by either the Kernel or Userspace |
| FutexRec | futex2(2)-like, used for FutexWait |

In addition io-uring-bearer still holds some of the individual OpCode Pending / Completion slab types:

| OpCode Type | Description |
|---|---|
| AcceptRec | accept4(2), used for Accept and AcceptMulti |
| FutexWaitRec | Represents FutexWait |
| RecvRec | Represents Recv |
| RecvMultiRec | Represents RecvMulti |
| SendZcRec | Represents SendZc |

Capacity

UringBearer requires bounded capacities to be described to it.

Leaving things unbounded, without any capacity planning, would make it easy to create opportunities for denial of service, crashes etc. at runtime.

All consumers must describe the required capacities through the BearerCapacityKind type.

Given that the capacity is described and used in a runtime context, you should test your intended runtime with the described capacity to ensure that the environment meets it wherever it is deployed.

Anything that hits the capacity will typically see the AtCapacity(setting) variant of SlabbableError.

The capacity values are only used when the UringBearer is constructed and cannot be changed for the rest of its lifetime.

See BearerCapacityKind for an example of how to use it along with the capacity crate.
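The following hypothetical sketch shows the general idea of capacity-bounded storage; it is not the Slabbable or BearerCapacityKind API. All slots are allocated up front, inserting beyond the fixed capacity returns an `AtCapacity` error instead of growing, and because the backing `Vec` never reallocates, slot addresses stay stable, which is the property the Slabbable section relies on.

```rust
/// Illustrative error, loosely mirroring an "at capacity" variant.
#[derive(Debug, PartialEq)]
pub enum StoreError {
    AtCapacity(usize),
}

/// Fixed-capacity slot storage; capacity is set once at construction.
pub struct Bounded<T> {
    items: Vec<Option<T>>,
    len: usize,
}

impl<T> Bounded<T> {
    /// Pre-allocates every slot; the Vec never grows afterwards, so the
    /// slot addresses stay stable for the store's whole lifetime.
    pub fn with_capacity(cap: usize) -> Self {
        let mut items = Vec::with_capacity(cap);
        items.resize_with(cap, || None);
        Self { items, len: 0 }
    }

    /// Stores `value` and returns its slot key, or refuses when full.
    pub fn insert(&mut self, value: T) -> Result<usize, StoreError> {
        let cap = self.items.len();
        let slot = self
            .items
            .iter()
            .position(Option::is_none)
            .ok_or(StoreError::AtCapacity(cap))?;
        self.items[slot] = Some(value);
        self.len += 1;
        Ok(slot)
    }

    /// Frees the slot, returning its value if it was occupied.
    pub fn remove(&mut self, key: usize) -> Option<T> {
        let v = self.items.get_mut(key)?.take();
        if v.is_some() {
            self.len -= 1;
        }
        v
    }

    pub fn len(&self) -> usize {
        self.len
    }
}
```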

Slabbable

See the blog post describing the Slabbable trait in general.

A normal Vec etc. may implicitly reallocate when it grows, without warning, invalidating the underlying addresses.

Given that memory addresses must be kept stable for many things submitted to the io_uring queue, UringBearer uses Slabbable to back the underlying pending or completed instances of Completion.

Any top-level binary can configure the desired Slabbable implementation through SelectedSlab.

By default, the crate currently uses nohash-hasher with hashbrown, via slabbable-hash.

Completions

We exploit the fact that the kernel has no opinion on what the user data of pending completions should contain: each submission is given a rotating u64 identifier, which is then mapped back to the completed type upon completion.

This avoids any from_raw_parts-like reconstruction, since we can refer to the record in storage through the rotating u64 serial.

We could also use std::mem::forget, but modelling this through the trait gives the user the choice of what kind of storage they would like.

The main reason we like to track the items is that it gives us some level of ownership / pointer provenance and lets us easily manage any associated data (e.g. regarding the ownership). More importantly, we can construct a gated accessor / barrier to the data: for example, while the kernel holds only an immutable reference to it, we can also hold immutable references ourselves, which is not possible if the submitted data is simply forgotten.

Not only that, but sometimes you might have an arena of buffers (e.g. a group of buffers) provided to the kernel, and you must handle the ownership status of a window / slice into the part of the group of contiguous buffers that has been provided back, essentially fragmenting the type in some scenarios.

In short, the trait gives us flexibility: we can test that all the implementations uphold the guarantees, while keeping an easy way to benchmark possible scenarios across the varying implementations in one go.
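A hypothetical sketch of the rotating-serial scheme: each submission receives a u64 that would be placed in the submission's user data, and the record is recovered from userspace storage when the matching completion arrives. `Pending` is an illustrative name, and it uses std's `HashMap` for simplicity, whereas the crate defaults to a Slabbable implementation.

```rust
use std::collections::HashMap;

/// Userspace storage for in-flight submission records, keyed by serial.
pub struct Pending<T> {
    next: u64,
    records: HashMap<u64, T>,
}

impl<T> Pending<T> {
    pub fn new() -> Self {
        Self { next: 0, records: HashMap::new() }
    }

    /// Registers a record and returns the serial to submit as user data.
    pub fn submit(&mut self, record: T) -> u64 {
        // After wrap-around, skip serials that are still in flight.
        while self.records.contains_key(&self.next) {
            self.next = self.next.wrapping_add(1);
        }
        let id = self.next;
        self.next = self.next.wrapping_add(1);
        self.records.insert(id, record);
        id
    }

    /// Called with the completion's user data; hands back the record.
    pub fn complete(&mut self, user_data: u64) -> Option<T> {
        self.records.remove(&user_data)
    }
}
```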

OpCode

All io_uring operations (ops) are described by their opcodes.

The following opcodes are currently implemented:

| OpCode | Trait / Built-in | Description | Linux Kernel |
|---|---|---|---|
| Accept | built-in | accept4(2) (single-shot) | |
| EpollCtl | trait impl | Epoll Control | |
| FutexWait | built-in | Futex Wait | |
| ProvideBuffers | built-in | Register Buffers with Kernel for faster I/O | |
| Recv | built-in | Receive (single-shot) | |
| RecvMulti | built-in | Receive (multi-shot) | |
| SendZc | built-in | Send (Zero Copy) | |

All the built-in OpCodes in the bearer will be migrated to implement the associated traits in the future.

For all the built-in & OpCode implementations, push a bearer-aware submission using the push_* or push_op_typed methods of the associated UringBearer.

Accept

Accept abstracts the underlying io_uring::opcode::Accept.

This single-shot Accept provides the source socket address and port in the completion.

If you don't need the source address and/or port, consider using AcceptMulti instead.

Note: This is being migrated to implement the OpCode + OpCompletion traits similar to EpollCtl.

Submission

You can currently push a single-shot Accept (unsafely), depending on whether the underlying TcpListener RawFd is IPv4 or IPv6:

To use it safely, the user must ensure that the underlying socket is exclusively either IPv4 or IPv6, given that the layout of the returned source address structure differs depending on which one it is.

If someone needs UNIX sockets, please feel free to send a PR.

Completion

Accept(AcceptRec) will show up as normal through the handler API via UringBearer.

Lifetime (Manual handling)

SubmissionRecordStatus::Forget is safe given the pending Completion was Single-shot.

If the completed record is retained, this will result in a memory leak.

EpollCtl

EpollCtl abstracts the underlying io_uring::opcode::EpollCtl.

You can use io_uring to batch the Epoll control calls.

For now, the separate io-uring-epoll crate also provides a convenient abstraction over the epoll_wait syscall, which is not yet available through the io_uring interface and is therefore used as a regular syscall.

If you have millions of sockets that constantly change status, it is helpful to be able to batch the control calls.

See the examples from the io-uring-epoll repository.

Note: io-uring-epoll 0.1 crate is different to 0.2 which implements the new OpCode/Completion traits.

Construct

- Construct the associated EpollUringHandler::with_bearer(UringBearer).

- Construct a HandledFd representing each individual Epoll-triggered RawFd.

Submission

- Construct EpollCtl::with_epfd_handled(epfd, handle_fd, your_reference)

- Use UringBearer::push_epoll_ctl to push the constructed EpollCtl as submission.

Completion

EpollCtl(impl OpExtEpollCtl) will show up as normal through the handler API via UringBearer.

Lifetime (Manual handling)

You should only Forget the underlying EpollCtl once the RawFd has been removed from the list of monitored filehandles.

Failing to Retain the underlying EpollCtl before removing it will result in undefined behaviour.

FutexWait

FutexWait abstracts the underlying io_uring::opcode::FutexWait.

Among other use cases, this can be used to combine epoll_wait (or other event waiting on another thread) with io_uring completions, given that atomics can be used to generate completion events through FutexWait.

Note: This is being migrated to implement the OpCode + OpCompletion traits similar to EpollCtl.

Construct

You can create the underlying indexed AtomicU32 which will be owned by the UringBearer through:

Alternatively and unsafely you can provide your own AtomicU32 to UringBearer through:

Submission

You can submit a FutexWait through UringBearer::add_futex_wait.

Completion

FutexWait(FutexWaitRec) will show up as normal through the handler API via UringBearer.

Lifetime (Manual handling)

SubmissionRecordStatus::Forget is safe given the pending Completion was Single-shot.

If the completed record is retained, this will result in a memory leak.

ProvideBuffers

ProvideBuffers abstracts the underlying io_uring::opcode::ProvideBuffers.

For faster I/O, it is essential to register (or map) userspace buffers with the kernel so that the kernel spends less time mapping userspace buffers between Recv / Send calls.

Note: This is being migrated to implement the OpCode + OpCompletion traits similar to EpollCtl.

Construct

See the main Buffers section on how to manage these.

Submission

Use the UringBearer::provide_buffers to both submit and associate any created Buffers with kernel-mapped identifiers.

We may later provide an API to make this easier to use, but for now the user must keep track of the kernel-mapped identifiers.

Completion

ProvideBuffers(ProvideBuffersRec) is provided normally through the handler API via UringBearer.

Lifetime (Manual handling)

The actual buffers are separate from their registration, so the submission can be safely forgotten after completion.

Recv

Single-shot Recv abstracts the underlying io_uring::opcode::Recv.

Note: This is being migrated to implement the OpCode + OpCompletion traits similar to EpollCtl.

Construct

Recv requires a registered filehandle and a previously created indexed buffer.

Submission

Use UringBearer::add_recv to submit a single-shot Recv to the kernel.

Completion

Recv(RecvRec) is provided normally through the handler API via UringBearer.

Lifetime (Manual handling)

SubmissionRecordStatus::Forget is safe given the pending Completion was Single-shot.

RecvMulti

RecvMulti abstracts the underlying [io_uring::opcode::RecvMulti](https://docs.rs/io-uring/latest/io_uring/opcode/struct.RecvMulti.html).

Note: This is being migrated to implement the OpCode + OpCompletion traits similar to EpollCtl.

Construct

RecvMulti requires both a registered filehandle and previously registered / kernel-mapped buffer/s in the referenced group.

Submission

Use UringBearer::add_recv_multi to submit a RecvMulti to the kernel.

Completion

RecvMulti(RecvMultiRec) is provided normally through the handler API via UringBearer.

See Buffers on how to deal with individual "selected buffers" within registered "grouped" Buffers.
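When the kernel selects a buffer from a provided group, the Linux completion flags carry its id: `IORING_CQE_F_BUFFER` (bit 0) signals that a buffer was selected, and the buffer id sits in the upper 16 bits (`IORING_CQE_BUFFER_SHIFT`). The constants below mirror those Linux values; the helper itself is hypothetical and not part of io-uring-bearer.

```rust
/// Mirrors Linux IORING_CQE_F_BUFFER: a provided buffer was selected.
pub const IORING_CQE_F_BUFFER: u32 = 1 << 0;
/// Mirrors Linux IORING_CQE_BUFFER_SHIFT: the buffer id is in the upper bits.
pub const IORING_CQE_BUFFER_SHIFT: u32 = 16;

/// Hypothetical helper: the kernel-selected buffer id of a completion,
/// if any, to be looked up within the registered buffer group.
pub fn selected_buffer_id(cqe_flags: u32) -> Option<u16> {
    if (cqe_flags & IORING_CQE_F_BUFFER) != 0 {
        Some((cqe_flags >> IORING_CQE_BUFFER_SHIFT) as u16)
    } else {
        None
    }
}
```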

Lifetime (Manual handling)

It would be undefined behaviour if the submission record is invalidated before the Multi-shot submission is either confirmed cancelled or timed out.
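For multi-shot requests, Linux sets `IORING_CQE_F_MORE` (bit 1) on every completion that will be followed by more; the final completion, whether the request ended, was cancelled or failed, arrives without it. The hypothetical helper below (not the bearer's `SubmissionRecordStatus` API) sketches how that flag could drive the Retain / Forget decision:

```rust
/// Mirrors the Linux IORING_CQE_F_MORE completion flag (1 << 1): set
/// while a multi-shot request will produce further completions.
pub const IORING_CQE_F_MORE: u32 = 1 << 1;

/// Illustrative decision for a submission record after one completion.
#[derive(Debug, PartialEq)]
pub enum RecordAction {
    /// More completions will arrive; the record must stay valid.
    Retain,
    /// Terminal completion; the record may now be dropped.
    Forget,
}

pub fn after_multishot_cqe(cqe_flags: u32) -> RecordAction {
    if (cqe_flags & IORING_CQE_F_MORE) != 0 {
        RecordAction::Retain
    } else {
        RecordAction::Forget
    }
}
```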

SendZc

SendZc abstracts the underlying io_uring::opcode::SendZc.

Note: This is being migrated to implement the OpCode + OpCompletion traits similar to EpollCtl.

Construct

Currently SendZc requires the associated indexing (through submission) into:

- registered filehandle (see Filehandles)

- registered buffer (see Buffers)

- kernel buffer id (see Buffers)

Submission

Currently submit using UringBearer::add_send_singlebuf.

Completion

SendZc(SendZcRec) is provided normally through the handler API via UringBearer.

Lifetime (Manual handling)

Extensions

Every OpCode should add:

- Its own separate crate (e.g. io-uring-epoll) and its own type (e.g. EpollCtl).

- Implementations of OpCode and OpCompletion from the io-uring-opcode crate.

- A feature-gated "extension" trait within io-uring-opcode, similar to OpExtEpollCtl.

- A feature-gated Completion variant within UringBearer, e.g. Completion::EpollCtl.

- An explicit API to push the OpCode as a submission, e.g. UringBearer::push_epoll_ctl.
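A compressed, hypothetical sketch of the extension checklist above (all names are illustrative; the real traits live in io-uring-opcode): an opcode type implements a completion trait, and the bearer's `Completion` enum gains a variant for it, which in practice would be feature-gated.

```rust
// Hypothetical sketch of the extension pattern; names are illustrative.

/// Stand-in for the OpCompletion contract of io-uring-opcode.
pub trait OpCompletionLike {
    /// Called with the completion's result code when this op completes.
    fn on_completion(&mut self, result: i32);
}

/// An opcode type that would live in its own crate (like EpollCtl).
pub struct MyNop {
    pub last_result: Option<i32>,
}

impl OpCompletionLike for MyNop {
    fn on_completion(&mut self, result: i32) {
        self.last_result = Some(result);
    }
}

/// Stand-in for the bearer's Completion enum. In practice the extension
/// variant would be gated, e.g. `#[cfg(feature = "my-nop")]`, so builds
/// without the feature carry no trace of it.
pub enum Completion {
    Accept,
    MyNop(MyNop),
}

impl Completion {
    /// Routes a completion result to the owning opcode type.
    pub fn dispatch(&mut self, result: i32) {
        match self {
            Completion::Accept => {}
            Completion::MyNop(op) => op.on_completion(result),
        }
    }
}
```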